Streamline Your Videography Workflow with AI: A Step-by-Step Guide

Introduction

Hi everyone, this is Blake Anderson, and I'm a videographer here in Toronto, Ontario. In this blog post, which supplements my YouTube video on the topic, I'm going to walk you through how I streamline my videography workflow by integrating AI. I'll cover the steps I take to create my own videos and where AI fits into each stage: pre-production, production, and post-production. The goal is to help you work more efficiently, so you get a quicker turnaround and enjoy the process of creating your videos more. Have you integrated AI into your video production yet? If not, this guide will show you practical ways to start.

Pre-Production: Enhancing Idea Generation with AI Dictation

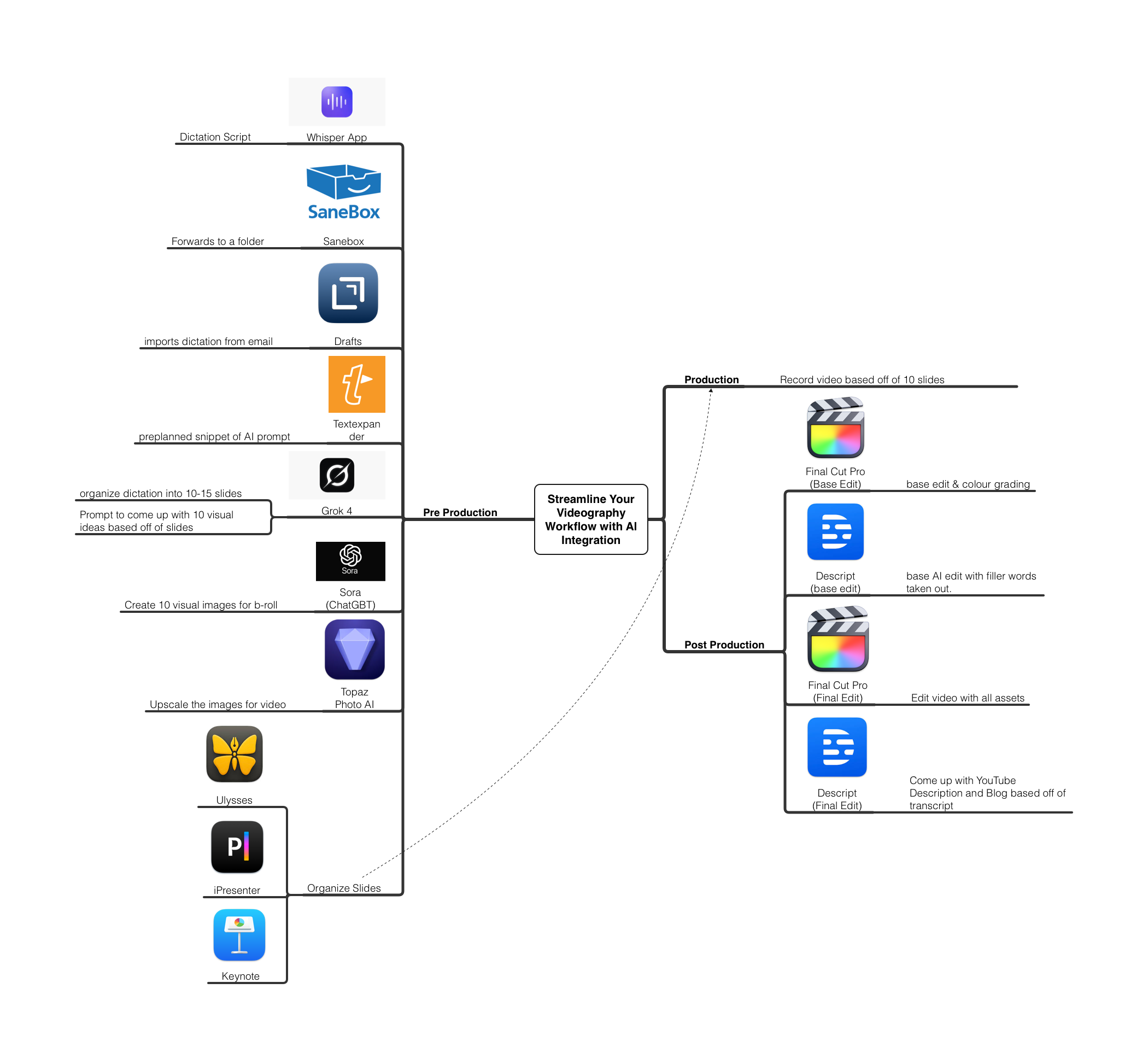

To start off, in the pre-production phase, I use dictation. I use an app called Whisper app, which you can get on your iPhone. It lets you dictate for up to, I believe, 90 minutes at a time, and it uses OpenAI. From your recording, it uses AI to organize the dictation into readable paragraphs. That makes the dictation much easier to use later in the workflow, when the AI helps me come up with the transcript and slides.

I think it's a great way to get your ideas out. Some people like writing, and of course you could write out what you want to include in the prompts for your video's transcript. But I find that if you get your ideas out through dictation first, you can then refine them further with writing. It's a good starting point, and I would definitely recommend Whisper app, the dictation app that lets you record for up to 90 minutes and uses AI.

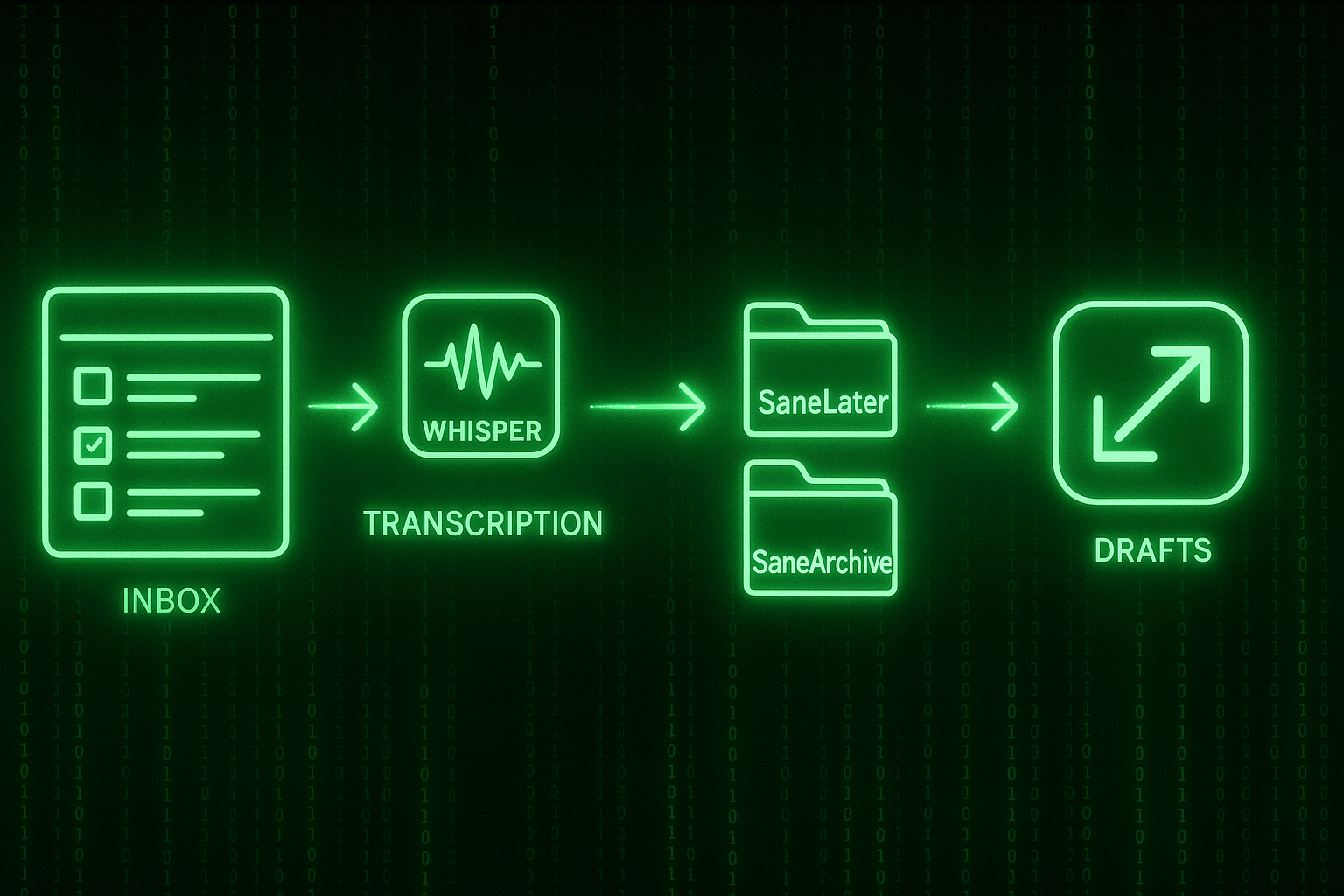

From the Whisper app on my iPhone, I can forward each dictation to an email address. In the app's settings, you can set it up to forward to your email. From there I use SaneBox, an online service that reads the emails coming into your inbox and lets you create smart rules to organize them. It has other features too, but I typically use it to sort my emails into different folders, so I can quickly get to the information I want, whether it's work-related or personal.

SaneBox lets you create a watch folder, and from that folder you can organize your dictations. Once they arrive in your email, you can move them to another folder, and on that folder you can set up a rule that forwards them to Drafts or another note-taking app. I like using Drafts on my Mac. As part of its setup, Drafts gives you an email address that you can forward notes to. Once a dictation is forwarded to that address, it's imported into Drafts, and I have all my readable dictations in paragraph form. I can then refine them with writing and add anything else I want to use in my prompts for the AI.

That's my workflow at the pre-production stage: I get my ideas out through dictation in the Whisper app, which organizes them into paragraphs and forwards them to my email. SaneBox then forwards them to my Drafts email address, and once they're in Drafts, I refine the dictation with writing so that the AI can understand what I'm going for in the transcript for my YouTube video. What AI dictation tools have you found helpful in your pre-production? Share in the comments below.

Utilizing AI for Script and Slide Creation in Videography

There are different ways to set up text expansion on your Mac or PC. A program I've used for many years is TextExpander. I've saved a few snippets that I commonly use when I'm creating videos, so I don't have to recreate the same prompts each time. Generally, the prompt says that I want to create a YouTube transcript based on this dictation.

What I typically like to do is split it into 10 to 15 slides. I ask the AI to create 10 high-level slides and instruct it to come up with a title and three main points per slide, all based on my dictation.

Some people might want to go further and have the AI write an actual word-for-word transcript. For me, though, having these slides is enough to organize my thinking, and I sound much more natural when I speak because I don't like the script to be overly prescriptive about what I'm saying.

So let's say you ask the AI to create these 10 slides with three main ideas per slide. Then you can copy and paste the result. I use Grok, and I've also used ChatGPT; there's also Perplexity and other AI apps out there. Whichever you use, I would encourage you to give it a lot of description of what you want to cover in your video, and then refine the output further through your own writing.

Use a succinct, descriptive prompt that says something like: "I want to create a transcript for my YouTube video. I want to create 10 slides with three main points per slide and a header title for each slide." The AI creates the 10 slides for me, and I copy and paste them into my note-taking app. I sometimes use Obsidian, but typically I'm using Ulysses to create these slides.
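As a rough illustration of what a reusable prompt snippet does, here is a minimal Python sketch that fills the slide count, the number of points, and the dictation into a fixed template. The function name `build_slide_prompt` and the exact wording are assumptions for illustration, not the actual snippet used in this workflow.

```python
def build_slide_prompt(dictation: str, slide_count: int = 10, points_per_slide: int = 3) -> str:
    """Build a reusable LLM prompt that asks for a slide outline of a dictation.

    Hypothetical helper: the wording mirrors the prompt described above,
    but it is only an example template.
    """
    lines = [
        "I want to create a transcript outline for my YouTube video.",
        f"Create {slide_count} high-level slides based on the dictation below.",
        f"Give each slide a header title and {points_per_slide} main points.",
        "",
        "Dictation:",
        dictation.strip(),  # drop stray whitespace from the pasted dictation
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_slide_prompt("My ideas about using AI in videography.", slide_count=12))
```

A text expansion app does the same job without any code; the point is simply that the instructions stay fixed and only the dictation changes from video to video.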

Next, I format these slides a little more using Markdown. Markdown is a lightweight markup language for adding headers and other formatting to plain text. Using Markdown, I create a second-level (H2) header for each slide title, and each of the three points gets a third-level (H3) header. You also want to separate each slide with a line of dashes. As I'll explain in the next section, this lets the presentation program read the slides so that you can quickly format them.

So I get the formatting right using Markdown in Ulysses: a dash line between each slide, and a separation between the H2 header and the H3 main points of each slide. Typically I'll also have the AI come up with a title based on my dictation. That title becomes an H1 header, and I separate it with another dash line so that it's its own slide. And there I have my formatted slides. This AI-driven approach to script creation can transform how you plan videos. Try it and see the difference in your efficiency.
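To make the slide layout concrete, here is a small Python sketch that renders a deck in the structure described above: an H1 title slide, then one H2 title and three H3 points per slide, with a dash line between slides. The `Slide` class, the `render_deck` function, and the use of `---` as the dash line are assumptions for illustration; check what your presentation app expects as a slide separator.

```python
from dataclasses import dataclass, field


@dataclass
class Slide:
    """One slide: a title plus its main points (hypothetical structure)."""
    title: str
    points: list[str] = field(default_factory=list)


def render_deck(deck_title: str, slides: list[Slide], separator: str = "---") -> str:
    """Render the deck as Markdown, one block per slide."""
    blocks = [f"# {deck_title}"]  # the H1 title gets its own slide
    for slide in slides:
        lines = [f"## {slide.title}"]                    # H2 slide title
        lines += [f"### {point}" for point in slide.points]  # H3 main points
        blocks.append("\n\n".join(lines))                # blank line between headers
    # A separator line, surrounded by blank lines, goes between every slide.
    return f"\n\n{separator}\n\n".join(blocks)


if __name__ == "__main__":
    deck = render_deck(
        "Streamline Your Videography Workflow with AI",
        [
            Slide("Pre-Production", ["Dictate ideas", "Forward to notes", "Refine in writing"]),
            Slide("Production", ["Format slides", "Animate in Keynote", "Record the video"]),
        ],
    )
    print(deck)
```

The printed text can be pasted into any Markdown editor. In practice the AI output usually needs only light hand-editing to reach this shape, so a script like this is optional.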

Production: Bringing AI-Generated Slides to Life

I copy and paste that into iA Presenter, a Markdown-based presentation app that lets you organize your slides using Markdown. iA Presenter reads the Markdown I formatted for those 10 slides, and because I've already formatted the headers, the slides come in structured that way. Then I typically change the template: I use the New York style template and make sure the font is the way I want it.

Once I have the slides looking the way I want in iA Presenter, I export them as a PowerPoint file. On my Mac, I have it set up so that any PowerPoint file opens in Keynote. I like that because Keynote gives me more creative freedom and lets me format much more efficiently. I really like Keynote for creating video slides, and I have another YouTube video where I walk through my Keynote workflow, so definitely check that out.

Once I've opened the exported slides in Keynote, I look them over to make sure the formatting is everything I want. Then I animate them. Typically I select all the slides and apply an animation at once. This is a quick way to add simple animations to your YouTube videos, so you don't have to spend a lot of time working on templates or title sequences when all you want is some slides in your video. Simple text and three main points per slide really drive home the key takeaways for your audience.

In Keynote, I usually use the cube animation, which creates a slide-over effect between slides. Once the animated slides look the way I want, I export them from Keynote as a movie file. Keynote gives you different export options, such as the frame rate and the codec you want to use. Typically I export as ProRes 422. These are very simple slides with simple animation and simple text, but ProRes makes editing much more efficient for your computer. The files are usually bigger, but it really doesn't degrade the image quality.

You could also use ProRes LT if you want to save a little space, but I export these slides as ProRes in 4K. At that point, I have a presentation covering the 10 or so main points I want to make in my video, based on my dictation. Then I record the video, walking through the slides and my ideas. Typically, if I can see the slides, I can speak largely off the cuff, although of course you'll probably want to review your notes for places where you want to add more information. This lets me speak more naturally, building on the dictation I created earlier. How do you prefer to record: scripted or off the cuff? Let me know!

Post-Production: AI Editing and Visual Enhancement for Professional Videos

Once I've recorded the video, I add it to Final Cut Pro. I do some basic editing there, such as cutting out gaps where I had to do retakes, but mostly I color grade. Some people color grade at the end, but because I shoot in a flat profile, I typically convert the footage to the Rec. 709 color space with a LUT first. Then I do some basic color grading using Magic Bullet Looks and add a bit of noise. Then I export that in H.264.

Once I have a file that's a basic run-through of my video, I add it to Descript. Descript is software that uses AI to let you edit video by editing its transcript. It has other AI features as well, and I won't go into depth about it here. Suffice it to say that I import my H.264 file and let the AI cut out all the ahs and ums, any other filler words, and any gaps in the speaking.

I would say the AI typically does a really good job of cutting out the ahs and ums. It gives you a smoother delivery, fast-tracks your editing, and makes you look more presentable and professional. You can go through line by line and review each suggestion the AI gives you, but often I just set it to medium and trust it: I select all the cuts and let the AI remove the ahs and ums automatically. There's also an option to cut out the gaps and other filler words.
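To give a feel for what filler-word removal means at the text level, here is a deliberately simple Python sketch that strips "um", "uh", and "ah" from a transcript with a regular expression. This is not how Descript works internally (it cuts the matching audio and video, and its detection is far more capable); the pattern and the `strip_fillers` name are assumptions for illustration only.

```python
import re

# Matches standalone "um", "uh", "ah" (and stretched forms like "umm"),
# plus an optional trailing comma or period and any following spaces.
FILLER_PATTERN = re.compile(r"\b(?:um+|uh+|ah+)\b[,.]?\s*", re.IGNORECASE)


def strip_fillers(transcript: str) -> str:
    """Return the transcript with simple filler words removed."""
    cleaned = FILLER_PATTERN.sub("", transcript)
    cleaned = re.sub(r"\s{2,}", " ", cleaned)  # collapse leftover double spaces
    return cleaned.strip()


if __name__ == "__main__":
    print(strip_fillers("Um so today I uh want to talk about ah slides."))
```

The word boundaries (`\b`) keep the pattern from touching ordinary words such as "ahead" or "umbrella". A real editor also has to decide how much silence to trim around each cut, which a text-only sketch like this ignores.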

So there I have an edited version of my video with the filler words, the ahs and ums, removed. Next, I export that from Descript using the highest quality setting, so it's not degrading the image, again as an H.264 file onto my MacBook. Now I have my edited video with the audio I recorded, plus my 10 slides (or 15, if there are 15), and I put all of that together in my Final Cut Pro timeline.

For visuals, I typically copy and paste the content of the slides into my LLM, usually Grok or ChatGPT, along with another text expansion prompt. I can also copy the transcript from Descript (you can create a blog post from it as well). With the slides and transcript pasted in, I ask ChatGPT or Grok to come up with, let's say, 10 different visual ideas, which I can then use as prompts to generate 10 images based on my slides.

It gives you different ideas in the form of different prompts. Of course, you could do this yourself, and sometimes I do if I'm just looking for a quick picture. But I find that the AI generally gives good ideas for illustrating concepts so that your audience really gets them. One pro tip: consider asking for no words in your visuals when you request the 10 visual ideas.
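The "no words in the visual" tip can be baked into the prompts themselves. Below is a rough Python sketch that turns a list of slide titles into one image prompt each, with that instruction appended every time. The `build_image_prompts` name, the default style, and the prompt wording are all assumptions for illustration; adjust them to whichever image generator you use.

```python
def build_image_prompts(slide_titles: list[str], style: str = "clean, modern illustration") -> list[str]:
    """Create one image-generation prompt per slide title (hypothetical helper)."""
    no_text_rule = "Do not include any words, letters, or text in the image."
    return [
        f"A {style} that conveys the idea of '{title}'. {no_text_rule}"
        for title in slide_titles
    ]


if __name__ == "__main__":
    for prompt in build_image_prompts(["Dictating ideas", "Formatting slides", "Editing with AI"]):
        print(prompt)
```

Asking the LLM for the visual ideas first and then appending a fixed rule like this keeps the prompts consistent across all 10 or 20 images.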

Then I go through and create 10 images, or maybe 20 if I want duplicates or similar visuals based on those 10 ideas. You can also generate video, and there are different tools for this: there's Midjourney, and I know ChatGPT and Grok have their own image generation. I typically use ChatGPT to create my visuals.

Then I import the images into Final Cut Pro and quickly create a slideshow from the 10 or 20 images. I add a transition between them to make it look more professional. You could also put a border around them. At this point I have my presentation, myself speaking on camera in the edited version from Descript, and visuals from the concepts the AI created.

Some people might want to incorporate more of their own creativity or insights by coming up with the ideas themselves. But I find that as long as the AI is basing the images and the presentation on your original words, it does a good job of freeing up the time you would've typically spent researching B-roll or visual ideas. As a lot of us know, the audience can get bored if they don't have a lot of images to look at. Just speaking on camera can be less engaging than having some B-roll or visuals to go along with your presentation.

So this is my workflow. Once I've done a basic edit in Final Cut Pro with all these slides and visuals, I also use the Magic Mask in Final Cut Pro. I select myself, and because I'm typically standing away from the wall, the AI can quickly create a mask of just me. That way I can remove the background from the image, and once the background is out, I can put the slides behind me. To do this, I create two timelines.

In one I have the masked version of myself, and in the other I have myself in the room. Then I can cut between myself in the room and a version with the slides or images behind me, perhaps with an animated background. There are different creative ideas you can come up with, but this lets you stay on camera while you're speaking and have the slides behind or beside you, so the viewer can focus on your words, your main ideas, and the key takeaways. It's a cool way to incorporate your slides and visuals. It's not distracting for the viewer, and it actually enhances both the educational and the entertainment value of your video.

So that's what I'm doing. Once I've created an edited version in Final Cut Pro, I export it. Sometimes I'll also put it into Descript one more time so that it reads the file, and there's a feature where you can ask it to create a YouTube description. It gives me a title, a paragraph describing the YouTube video, and chapter markers. In the past, it would've taken 20 to 30 minutes to come up with your own title, description, and chapter markers. I've found that using Descript for this works well for me. I paste the result into the YouTube description, create a thumbnail, and from there I have a much more engaging video, with AI doing some of the work for me.
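If you ever need to write or fix chapter markers by hand, the format is simple enough to script. Here is a minimal Python sketch that turns a list of start times and titles into description-ready lines. The function names and the sample chapters are assumptions for illustration, and the check that the first chapter starts at 0:00 reflects my understanding of what YouTube expects, so verify it against YouTube's current help pages.

```python
def format_timestamp(seconds: int) -> str:
    """Format a start time as M:SS, or H:MM:SS once it passes an hour."""
    hours, remainder = divmod(seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def chapter_lines(chapters: list[tuple[int, str]]) -> str:
    """Turn (start_seconds, title) pairs into one chapter line each."""
    ordered = sorted(chapters)  # chapters should appear in ascending time order
    if not ordered or ordered[0][0] != 0:
        raise ValueError("the first chapter should start at 0:00")
    return "\n".join(f"{format_timestamp(start)} {title}" for start, title in ordered)


if __name__ == "__main__":
    print(chapter_lines([
        (0, "Introduction"),
        (95, "Pre-Production"),
        (480, "Production"),
        (900, "Post-Production"),
    ]))
```

The output can be pasted at the end of the description that Descript generates, or used to double-check the chapter markers it suggests.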

Conclusion

In this post, I walked you through how I use AI across pre-production, production, and post-production. As a videographer, integrating AI into your workflow can elevate both your efficiency and creativity. This guide is a snapshot of my journey optimizing each video production stage with AI support. For more insights into creative workflows using AI, feel free to check out my other resources and videos. If you liked this post and the accompanying video, please like and subscribe. Thanks, and have a great day! What parts of this AI videography workflow will you implement first? I'd love to hear your experiences.